Allora: How Blockchain Solves the AI Long-Tail Problem

A Focus on Allora's Structure and Vision

1. Introduction

Since the emergence of generative AI, led by ChatGPT, AI technology has advanced rapidly alongside growing corporate participation and investment in the AI industry. Today's AI performs well not only at generating specific outputs but also at large-scale data processing, pattern recognition, statistical analysis, and predictive modeling, which has broadened AI adoption across industries:

- JPMorgan Chase: Hired over 600 ML engineers and developed/implemented more than 400 AI technology use cases including algorithmic trading, fraud prediction, and cash flow forecasting

- Walmart: Analyzes seasonal and regional sales history to predict product demand and optimize inventory

- Ford: Analyzes vehicle sensor data to predict parts failures and notify customers, preventing accidents caused by part failures

Recently, there has been an increasing trend of combining blockchain ecosystems with AI, with particular attention being paid to the DeFAI sector, where DeFi protocols are combined with AI. Furthermore, there are increasing cases of directly incorporating AI into protocol operating mechanisms, which enables efficient risk prediction and management of DeFi protocols and introduces new types of financial product services that were previously unavailable.

However, building AI models specialized for specific functions remains dominated by large corporations and AI specialists because of high entry barriers, including the need for vast amounts of training data and specialized AI expertise.

As a result, other industries and small startups face significant difficulties in adopting AI, and blockchain ecosystem dApps are not exempt from these constraints. Additionally, since dApps must maintain the core value of "trustlessness" - not requiring trust in third parties - the development of decentralized AI infrastructure is necessary for more protocols to reliably adopt AI and provide services that users can trust.

Against this background, Allora aims to implement a self-improving decentralized AI infrastructure and support dApps and startups wishing to integrate AI into their services securely.

2. Allora, A Decentralized Inference Synthesis Network

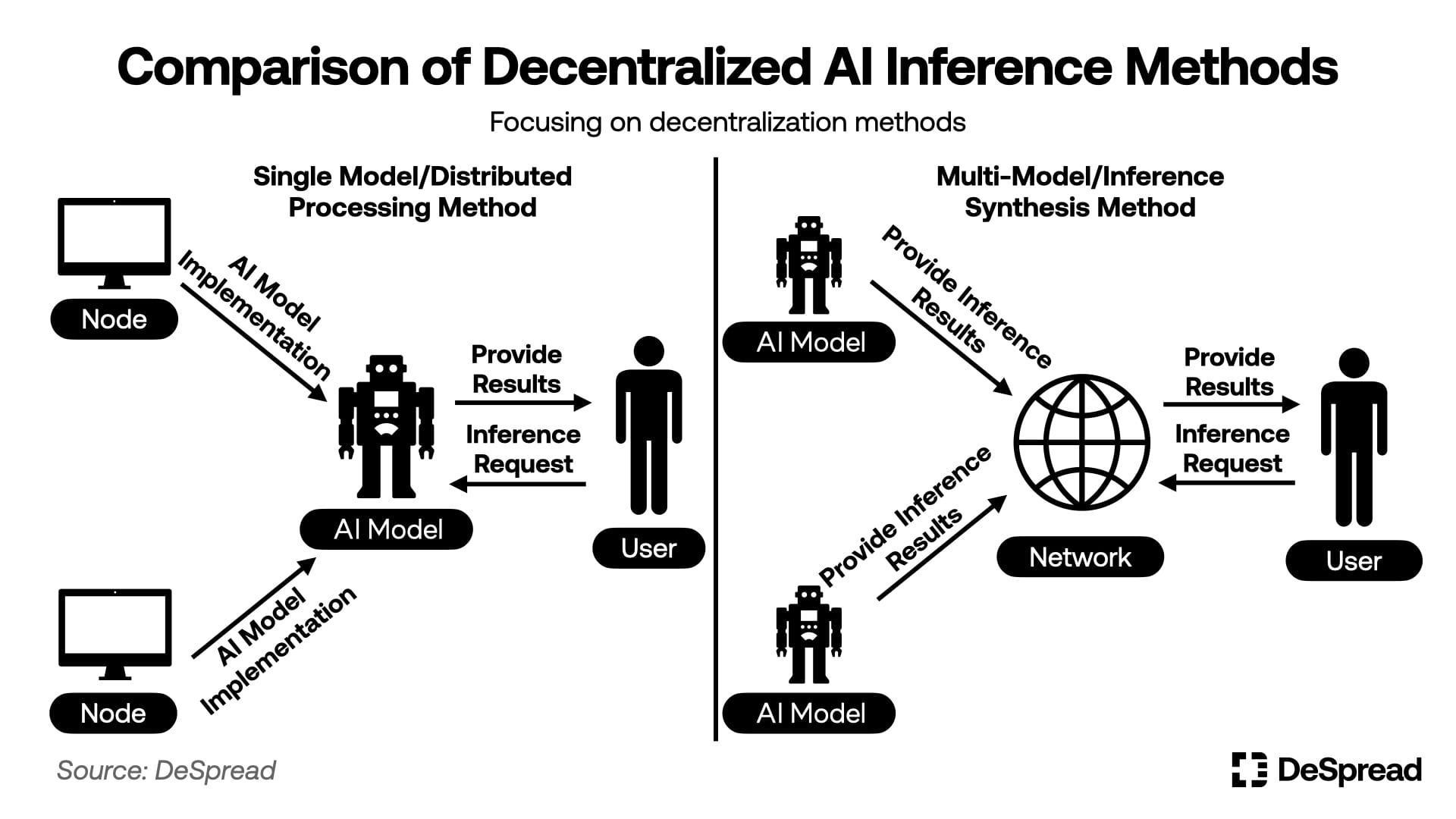

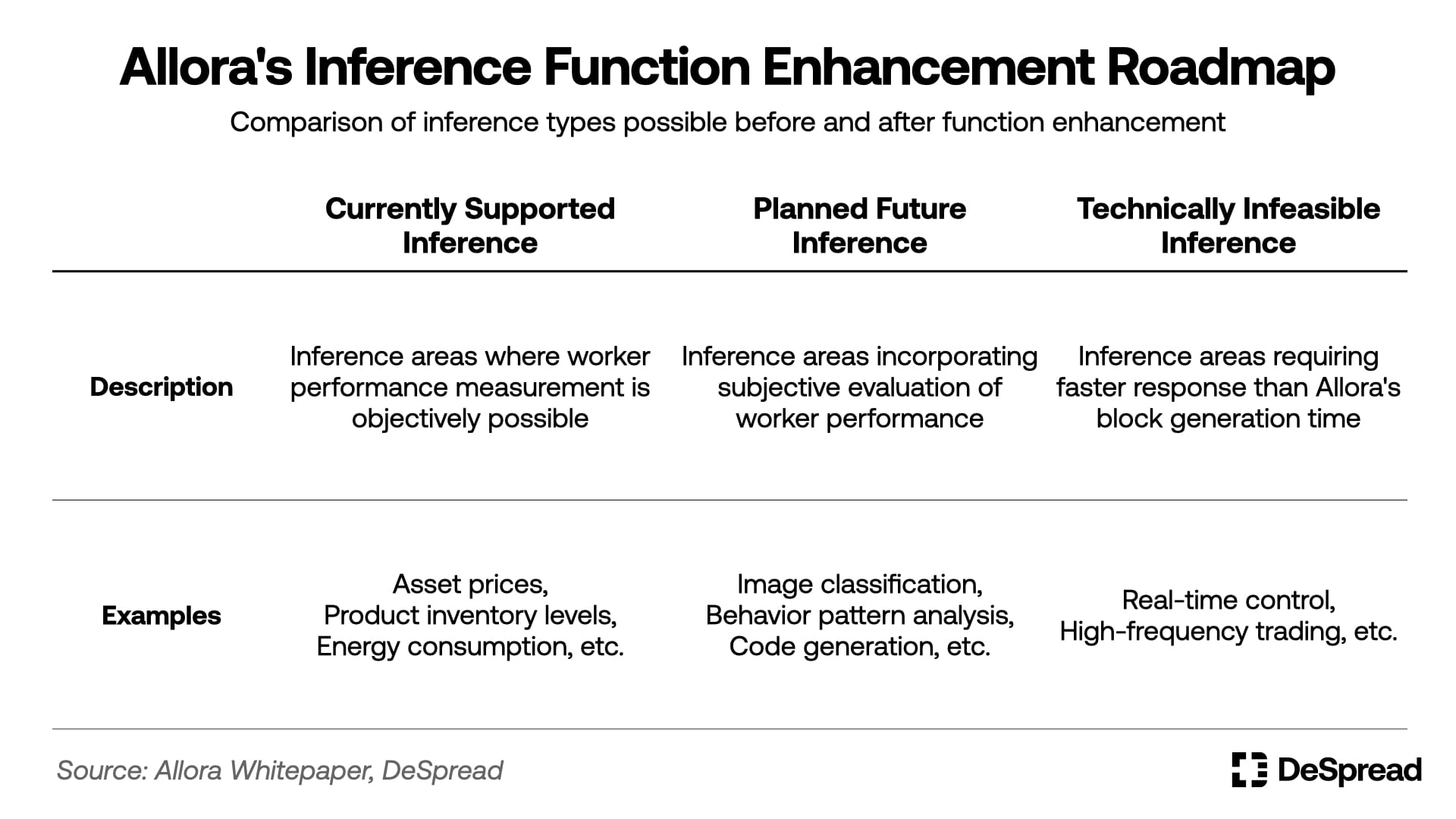

Allora is a decentralized inference network that predicts and provides future values for specific topics requested by various entities. There are two main approaches to implementing decentralized AI inference:

- Single Model/Distributed Processing: Building a decentralized single AI model by conducting model training and inference processes in a distributed manner

- Multi-Model/Inference Synthesis: Collecting inference results from multiple pre-trained AI models and synthesizing them to produce a single inference result

Of these two approaches, Allora adopts the multi-model/inference synthesis method. AI model operators freely join the Allora network and execute inference for the predictions requested on specific topics, and the protocol returns to requesters a single prediction synthesized from the inference values those operators produce.

When synthesizing inference values, Allora does not simply average the values produced by each model; it assigns a weight to each model and combines the values accordingly. Afterwards, Allora compares the actual result for the topic with the value each model produced and assigns higher weights and incentives to the models whose inferences were closest to the actual result. This feedback loop is how the network self-improves its inference accuracy.
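The weighting and feedback loop described above can be illustrated with a minimal sketch. This is not Allora's actual formula: the function names, the exponential update rule, and the `sensitivity` parameter are assumptions chosen only to show the idea of weighted synthesis followed by a reweighting toward more accurate models.

```python
import math

def synthesize(inferences, weights):
    """Weighted average of worker inferences (weights need not sum to 1)."""
    total = sum(weights[w] for w in inferences)
    return sum(inferences[w] * weights[w] for w in inferences) / total

def update_weights(inferences, weights, actual, sensitivity=1.0):
    """Shift weight toward workers whose inference was closer to the actual value.

    Hypothetical rule: scale each weight by exp(-sensitivity * relative error),
    then renormalize so the weights sum to 1.
    """
    raw = {}
    for worker, value in inferences.items():
        rel_error = abs(value - actual) / abs(actual)
        raw[worker] = weights[worker] * math.exp(-sensitivity * rel_error)
    total = sum(raw.values())
    return {worker: r / total for worker, r in raw.items()}

# Illustrative values only.
inferences = {"model_a": 101.0, "model_b": 110.0, "model_c": 95.0}
weights = {"model_a": 1 / 3, "model_b": 1 / 3, "model_c": 1 / 3}

combined = synthesize(inferences, weights)
new_weights = update_weights(inferences, weights, actual=100.0)
```

With an actual value of 100, `model_a` (1% error) ends up with the largest weight and `model_b` (10% error) with the smallest, so the next synthesis leans toward the models that were recently more accurate.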

Through this approach, Allora can execute inference that is more specialized to specific topics than decentralized AI built using the single model/distributed processing method. To encourage more AI models to participate in the protocol, Allora provides the open-source Allora MDK (Model Development Kit), a framework that helps anyone build, deploy, and use AI models easily.

Additionally, Allora provides two SDKs, the Allora Network Python SDK and TypeScript SDK, for users who want to consume Allora's inference data, so that they can easily integrate that data into their own services.

Thus, Allora aims to function as a middle layer connecting AI models with services/protocols that need inference data, providing AI model operators with opportunities to generate revenue through AI models while establishing itself as an infrastructure that provides unbiased data needed by services/protocols.

Let's examine Allora's detailed protocol architecture to understand how Allora works and its distinctive features.

2.1. Protocol Architecture

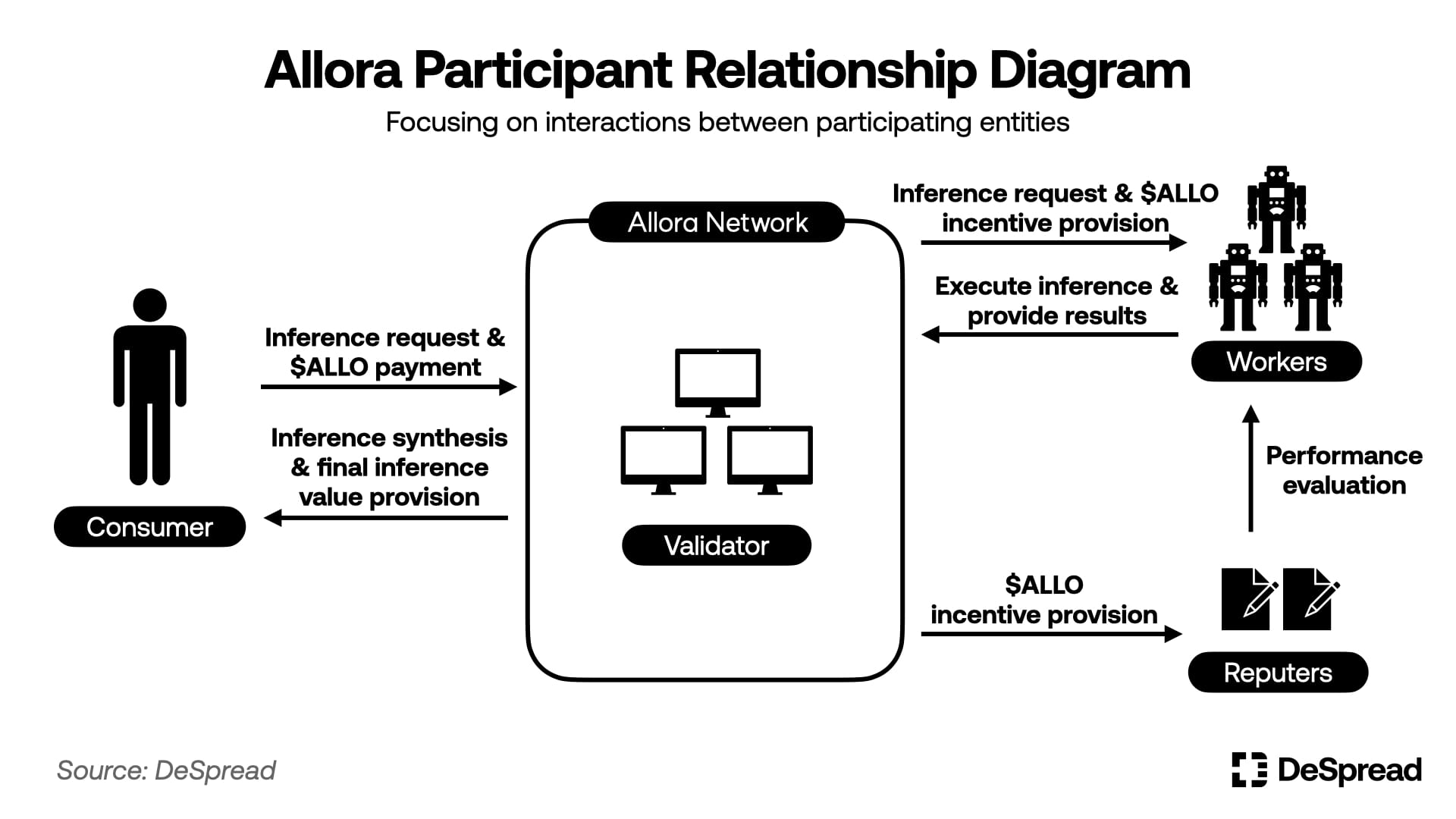

In Allora, anyone can set up and deploy specific topics, and four participating entities are involved in the process of executing inference and deriving final inference values for deployed topics:

- Consumers: Entities that pay fees to request inference for specific topics

- Workers: Entities that operate AI models using their databases and execute inference requested by consumers for specific topics

- Reputers: Entities that evaluate workers' inferences by comparing the values workers derive with actual values

- Validators: Entities that operate Allora network nodes, processing and recording transactions generated by each participating entity

The Allora network has a structure that separates inference execution, evaluation, and validation entities, with the network token $ALLO at its center. $ALLO is used as inference request fees and incentives for inference execution, smoothly connecting network participants while also being staked to ensure security.

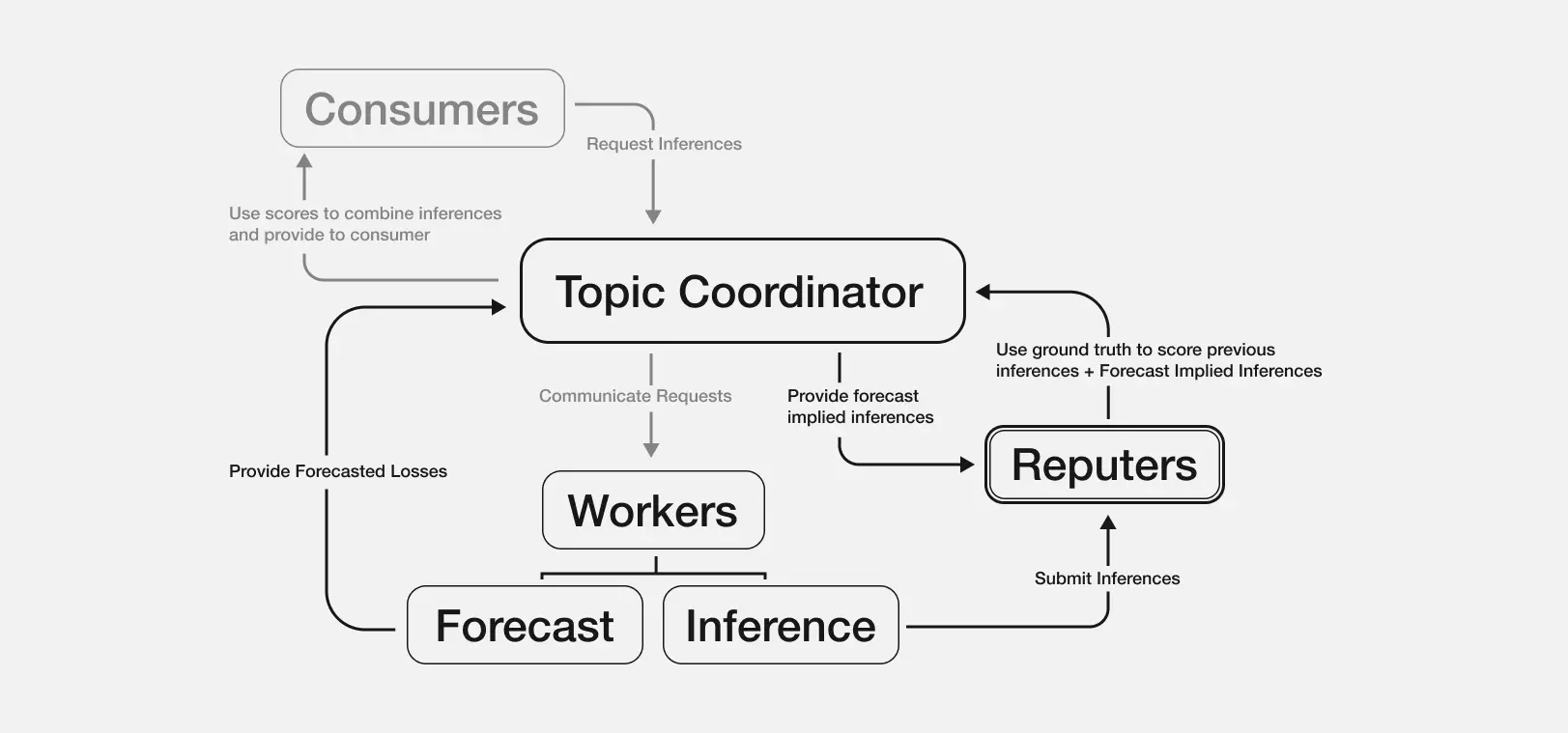

Looking at the Allora protocol from a functional perspective, we'll examine in detail the role of each layer (the inference consumption layer, the forecast and synthesis layer, and the consensus layer) and the interactions between participants.

2.1.1. Inference Consumption Layer

The inference consumption layer handles interactions between protocol participants and the Allora protocol, including topic creation, topic participant management, and inference requests.

Users who want to create a topic interact with the Topic Coordinator, Allora's topic and inference management system. They pay a certain amount of $ALLO and set up a rule set that defines what is to be inferred, how actual results are verified, and how the inference values derived by workers are evaluated.

Once a topic is created, workers and reputers can register themselves as inference participants for that topic by paying registration fees in $ALLO. Reputers must additionally stake a certain amount of $ALLO in the topic, which exposes them to slashing if they validate results maliciously.

After topics are created and workers and reputers are registered, consumers can request inferences by paying $ALLO to the topic, and workers and reputers receive these topic request fees as compensation for deriving inference values.

2.1.2. Forecast & Synthesis Layer

The forecast and synthesis layer is Allora's core layer for generating decentralized inferences, where workers execute inferences, reputers evaluate performance, and weight setting and inference synthesis take place based on these evaluations.

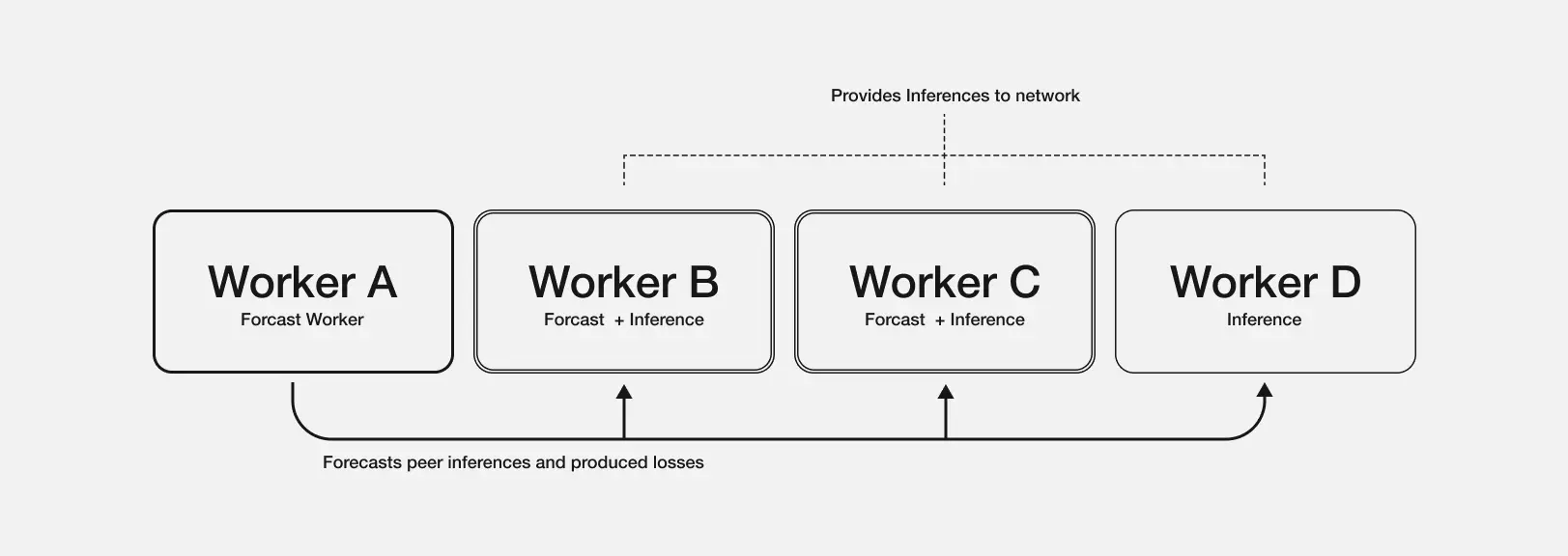

Workers in the Allora network not only submit inference values for topics requested by consumers but also evaluate the accuracy of other workers' inferences and derive Forecasted Losses based on these evaluations. These forecasted losses are reflected in weight calculations needed for inference synthesis, and workers receive higher incentives both when their inferences are accurate and when they accurately predict other workers' inference accuracy. Through this structure, Allora can derive inference synthesis weights that consider various contextual situations, not just workers' past performance.

For example, in a topic predicting Bitcoin's price one hour later, let's assume the following about workers A and B:

- Worker A: Has high average inference accuracy of 90% but shows reduced accuracy in volatile market conditions

- Worker B: Has average inference accuracy of 80% but maintains relatively high accuracy in volatile market conditions

If the current market is highly volatile and multiple workers predict "Worker B will have only about 5% error in this prediction due to their strength in volatile situations" while predicting "Worker A is expected to have about 15% error in this volatile situation," Allora will assign higher weight to Worker B's inference for this prediction despite their lower average historical performance.
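The Worker A / Worker B example can be made concrete with a small sketch. It assumes, purely for illustration, that a worker's weight is inversely proportional to the mean loss other workers forecast for it; the function name, the inverse-loss rule, and all numbers (including the price predictions) are hypothetical and not taken from Allora's specification.

```python
def weights_from_forecasted_losses(forecasted_losses, eps=1e-12):
    """Turn forecasted losses into normalized synthesis weights.

    forecasted_losses maps each worker to the list of losses that other
    workers forecast for it in the current context.
    Assumption: weight is proportional to 1 / mean forecasted loss.
    """
    mean_loss = {w: sum(ls) / len(ls) for w, ls in forecasted_losses.items()}
    inverse = {w: 1.0 / (m + eps) for w, m in mean_loss.items()}
    total = sum(inverse.values())
    return {w: v / total for w, v in inverse.items()}

# In a volatile market, peers forecast ~15% error for A and ~5% for B.
forecasted_losses = {
    "worker_a": [0.15, 0.14, 0.16],
    "worker_b": [0.05, 0.06, 0.04],
}
weights = weights_from_forecasted_losses(forecasted_losses)

# Hypothetical one-hour-ahead Bitcoin price inferences.
inferences = {"worker_a": 97_000.0, "worker_b": 95_000.0}
combined = sum(inferences[w] * weights[w] for w in inferences)
```

Under these assumptions Worker B receives roughly three times Worker A's weight for this prediction, even though Worker A has the better long-run average.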

The Topic Coordinator synthesizes inferences using the final weights derived through this process and provides the final inference value to the consumer. Additionally, during this process, confidence intervals are calculated and provided based on the distribution of inference values submitted by workers. Subsequently, reputers compare actual results with final inference values to evaluate each worker's inference performance and accuracy in predicting other workers' inference accuracy, adjusting workers' weights through consensus based on staking shares.
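One way to picture "consensus based on staking shares" is a stake-weighted average of the losses that reputers report for each worker. The sketch below is an assumption about the general shape of such a mechanism, not Allora's actual consensus rule; the function name and all values are hypothetical, and it assumes every reputer reports a loss for every worker.

```python
def consensus_losses(reported, stakes):
    """Stake-weighted average of the loss each reputer reports per worker."""
    total_stake = sum(stakes[r] for r in reported)
    workers = {w for losses in reported.values() for w in losses}
    return {
        w: sum(stakes[r] * reported[r][w] for r in reported) / total_stake
        for w in workers
    }

stakes = {"reputer_1": 600.0, "reputer_2": 300.0, "reputer_3": 100.0}
reported = {
    "reputer_1": {"worker_a": 0.12, "worker_b": 0.04},
    "reputer_2": {"worker_a": 0.14, "worker_b": 0.05},
    "reputer_3": {"worker_a": 0.50, "worker_b": 0.50},  # outlier with little stake
}
agreed = consensus_losses(reported, stakes)
```

Because the outlier holds only 10% of the stake, it moves the agreed losses only slightly, which is the intuition behind tying a reputer's influence to the amount it has at risk.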

Allora conducts inference synthesis and evaluation through this method, and particularly, the 'context awareness' structure where each worker evaluates other workers' inference accuracy contributes to improving inference accuracy by enabling Allora to derive inference values optimized for each situation. Moreover, as workers' inference performance data accumulates, the context awareness function operates more efficiently, allowing Allora's inference function to self-improve more effectively.

2.1.3. Consensus Layer

Allora's consensus layer is where topic weight calculations, network reward distribution, and participant activity recording take place. It is built on the Cosmos SDK and uses CometBFT with a DPoS (Delegated Proof of Stake) consensus mechanism.

Users can participate in the Allora network as validators by staking $ALLO tokens and operating nodes, receiving transaction fees submitted by Allora participants as compensation for operating the network and ensuring security. Even without operating nodes, users can receive these incentives indirectly by delegating their $ALLO to validators.

Additionally, Allora features $ALLO inflation incentives distributed to network participants, with 75% of newly unlocked and distributed $ALLO going to workers and reputers participating in topic inference, and the remaining 25% to validators. These inflation incentives cease once all $ALLO is issued and follow a structure where unlocked quantities gradually halve.
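The 75/25 split and the halving structure can be sketched as follows. The split ratio comes from the text; the step-wise halving schedule, the function names, and the parameters `initial_emission` and `halving_interval` are assumptions for illustration, since the text does not specify the exact emission curve.

```python
def epoch_emission(initial_emission, halving_interval, epoch):
    """Emission for an epoch when the amount halves every `halving_interval` epochs.

    Assumption: a simple step-wise halving schedule.
    """
    return initial_emission / (2 ** (epoch // halving_interval))

def split_emission(emission):
    """Split newly distributed $ALLO: 75% to workers and reputers, 25% to validators."""
    return {
        "workers_and_reputers": emission * 0.75,
        "validators": emission * 0.25,
    }

# Hypothetical numbers: 1,000 $ALLO per epoch at launch, halving every 4 epochs.
first = epoch_emission(1000.0, 4, epoch=0)
after_one_halving = epoch_emission(1000.0, 4, epoch=4)
after_two_halvings = epoch_emission(1000.0, 4, epoch=9)
shares = split_emission(first)
```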

When the 75% inflation incentive is distributed to workers and reputers, the distribution ratio is determined not only by worker performance and reputer staking shares but also by topic weight. Topic weight is calculated based on the staking amounts of reputers participating in the topic and fee revenue, thereby incentivizing continued worker and reputer participation in topics with high demand and stability.
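To show how topic weight could steer rewards, the sketch below splits a reward pool across topics in proportion to a weight computed from reputer stake and fee revenue. The text only says that both quantities feed into the weight; taking the square root of their product is a hypothetical choice made here for illustration, as are the function names, topic names, and numbers.

```python
import math

def topic_weight(reputer_stake, fee_revenue):
    """Hypothetical topic weight: square root of (stake * fee revenue)."""
    return math.sqrt(reputer_stake * fee_revenue)

def distribute_by_topic(reward_pool, topics):
    """Split a reward pool across topics in proportion to their weights."""
    weights = {name: topic_weight(t["stake"], t["fees"]) for name, t in topics.items()}
    total = sum(weights.values())
    return {name: reward_pool * w / total for name, w in weights.items()}

topics = {
    "btc_1h_price": {"stake": 10_000.0, "fees": 400.0},
    "eth_volatility": {"stake": 2_500.0, "fees": 100.0},
}
# 750 stands in for the workers-and-reputers share of one epoch's emission.
rewards = distribute_by_topic(750.0, topics)
```

Within each topic, the allocated amount would then be divided further according to worker performance and reputer staking shares, as the paragraph above describes.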

3. From On-chain to Various Industries

3.1. Allora Approaching Mainnet Launch

Allora established the Allora Foundation on January 10, 2025, and is accelerating toward mainnet launch after completing a public testnet with over 300,000 participating workers. As of February 6, it is running the Allora Model Forge Competition to select AI model creators for the Allora network following mainnet launch.

Additionally, Allora is establishing partnerships with various projects ahead of mainnet launch. The key partner projects and functionalities Allora provides to them are as follows:

- Plume: Providing RWA price feeds on the Plume network, real-time APY and risk prediction

- Story Protocol: Providing IP value assessment and potential insights, price feeds for illiquid on-chain assets, Allora inference for Story Protocol-based DeFi

- Monad: Providing price feeds for illiquid on-chain assets, Allora inference for Monad-based DeFi

- 0xScope: Supporting development of on-chain activity assistant AI Jarvis using Allora's context awareness capabilities

- Virtuals Protocol: Enhancing agent performance by integrating Allora inference with Virtuals Protocol's G.A.M.E framework

- Eliza OS (formerly ai16z): Enhancing agent performance by integrating Allora inference with Eliza OS's Eliza framework

Currently, Allora's partnerships are primarily focused on AI/crypto projects, reflecting two key factors: 1) high demand for decentralized inference from crypto-based projects, and 2) easy access to on-chain data necessary for AI models to execute required inferences.

In the early period after mainnet launch, Allora is expected to allocate substantial inflation rewards to attract participants. To keep the participants drawn by these rewards active, Allora needs to maintain an appropriate value for $ALLO. However, since inflation rewards gradually decrease over time, the long-term challenge is to generate enough network transaction fees through growing inference demand to keep incentivizing protocol participation.

Therefore, to assess Allora's potential success, it's crucial to closely observe both Allora's short-term $ALLO value appreciation strategy and its long-term ability to secure stable fee revenue through inference demand.

4. Conclusion

As AI technology advances and proves its utility, AI inference adoption and implementation are actively progressing across most industries. However, the resource-intensive nature of AI adoption is widening the competitive gap between large corporations that have successfully implemented AI and smaller companies that cannot. In this environment, demand for Allora's capabilities - providing topic-optimized inferences and self-improving data accuracy through decentralization - is expected to gradually increase.

Allora aims to become a widely adopted decentralized inference infrastructure across all industries, but realizing this vision requires proving both functional effectiveness and sustainability. To demonstrate this, Allora needs to secure sufficient workers and reputers from initial mainnet launch and ensure sustainable incentives for these network participants.

If Allora successfully addresses these challenges and achieves adoption across various industries, it will not only demonstrate blockchain's potential as essential AI infrastructure but also serve as a key example of how the combination of AI and blockchain, two technologies leading the current IT industry, can deliver real value to humanity.